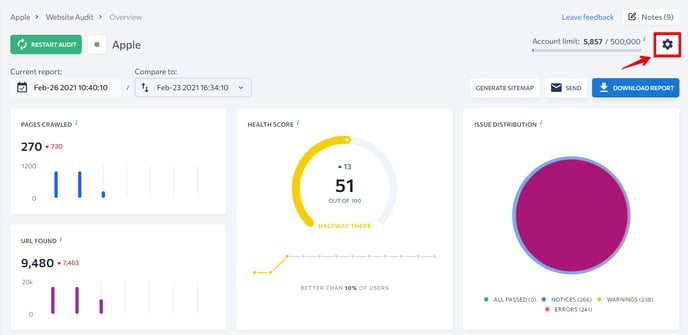

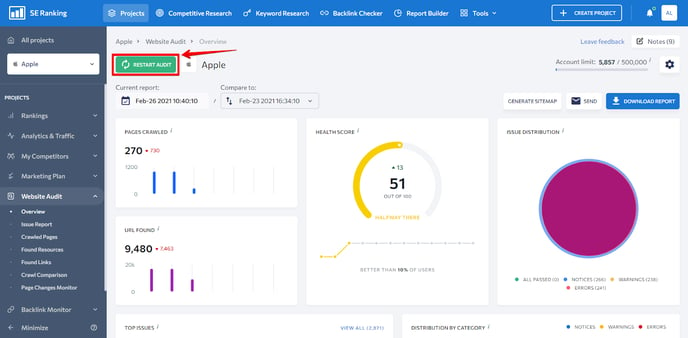

Flexible settings give you full control over both the audit process and its results. You can open the settings from any section of the tool.

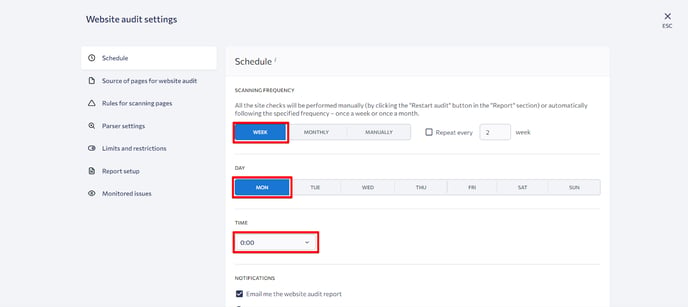

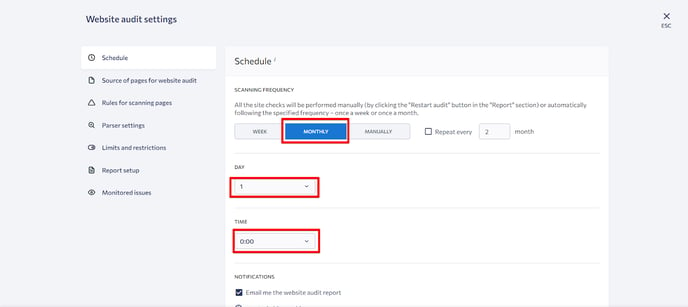

Schedule

Under this settings block, you can decide how often you want to audit your website.

The following options are available:

1. Manually. With this option, you start each audit yourself by clicking the Restart audit button, which is available in every section of the tool.

2. Weekly. The audit will run on a weekly basis on the day of the week and time of your choice.

3. Monthly. The audit will run on a monthly basis on the day of the week and time of your choice.

The time can only be set in GMT.
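Because the schedule only accepts GMT, you may need to convert your local start time first. Below is a minimal Python sketch, assuming you know your current UTC offset in hours (it uses a fixed offset, so it does not account for daylight saving changes). The function name `local_to_gmt` is hypothetical and not part of SE Ranking. It also reports whether the conversion moves the time to the previous or next day, which matters for the weekly and monthly schedules.

```python
from datetime import datetime, timedelta, timezone


def local_to_gmt(local_time: str, utc_offset_hours: float) -> tuple[str, int]:
    """Convert a local 'HH:MM' time to GMT.

    Returns the GMT time as 'HH:MM' and the day shift
    (-1 = previous day, 0 = same day, +1 = next day).
    Assumes a fixed UTC offset (no daylight saving handling).
    """
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    # strptime fills in a placeholder date; only the time of day matters here.
    local = datetime.strptime(local_time, "%H:%M").replace(tzinfo=local_tz)
    gmt = local.astimezone(timezone.utc)
    day_shift = (gmt.date() - local.date()).days
    return gmt.strftime("%H:%M"), day_shift


# 09:00 at UTC-4 is 13:00 GMT on the same day.
print(local_to_gmt("09:00", -4))
```

If the day shift is -1 or +1, pick the previous or next day of the week in the schedule so the audit still starts at your intended local time.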

Even with automatic checks scheduled, you can still start an audit manually at any time.

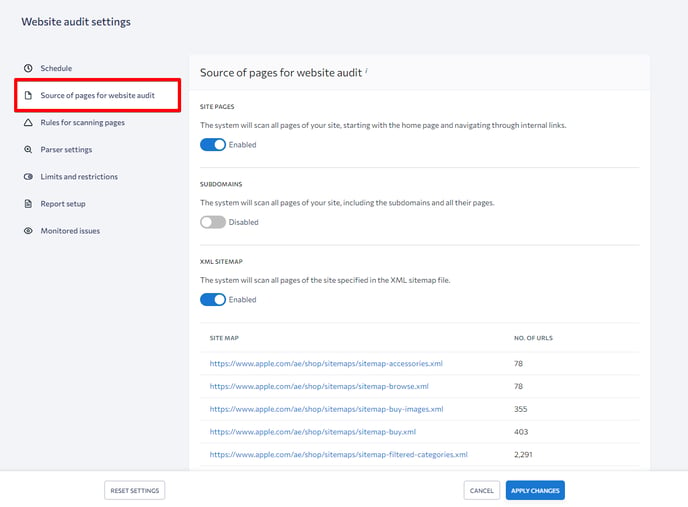

Source of pages for website audit

Under this settings block, you can let the tool know which pages you want to audit.

- Site pages. The tool will crawl every page of the site, starting from the homepage and following every internal link, not including subdomains.

- Subdomains. The tool will crawl every page of the site, including subdomains and their pages.

- XML sitemap. The tool will crawl every page specified in the XML sitemap.

  After the first audit, the tool automatically finds the XML sitemap, provided it is located at domain.com/sitemap.xml. You can also add a link to the XML sitemap manually by clicking the Add sitemap button. After adding the link, be sure to save your changes.

- My list of pages. You can add your own list of pages to be audited. Upload a TXT or CSV file with each URL on a separate line.
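To illustrate the difference between the Site pages and Subdomains options, and the expected layout of an uploaded URL list, here is a minimal Python sketch. It is a conceptual illustration, not SE Ranking's implementation: the function names `in_scope` and `read_url_list` are hypothetical, and it assumes a page counts as "on the site" when its host equals the site's domain exactly (so `www.example.com` would be treated as a subdomain of `example.com`).

```python
from urllib.parse import urlsplit


def in_scope(url: str, site: str, include_subdomains: bool) -> bool:
    """Decide whether a discovered URL belongs to the crawl.

    site: bare domain such as 'example.com' (hypothetical input format).
    include_subdomains=False mimics 'Site pages'; True mimics 'Subdomains'.
    """
    host = (urlsplit(url).hostname or "").lower()
    root = site.lower()
    if host == root:
        return True
    # The leading dot prevents 'notexample.com' from matching 'example.com'.
    return include_subdomains and host.endswith("." + root)


def read_url_list(text: str) -> list[str]:
    """Parse a 'My list of pages' upload: one URL per line, blank lines skipped."""
    return [line.strip() for line in text.splitlines() if line.strip()]
```

For example, `in_scope("https://shop.example.com/", "example.com", False)` returns `False`, while the same call with `include_subdomains=True` returns `True`.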

Before starting your site audit, you can specify which paths to scan. The following options are available:

- Specify one or more directories for scanning (allow paths).

- Specify one or more directories to exclude from scanning (disallow paths).

- Specify one or more directories that will not be included in the report.
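The three path options can be pictured as simple prefix filters. The Python sketch below is a hypothetical model, not how SE Ranking necessarily evaluates paths: it assumes that when allow paths are set, only URLs under them are scanned; that disallow paths always win; and that report exclusions are applied to pages after they have been scanned. The names `should_crawl` and `report_pages` are illustrative only.

```python
from urllib.parse import urlsplit


def _path(url: str) -> str:
    return urlsplit(url).path or "/"


def should_crawl(url: str, allow: list[str], disallow: list[str]) -> bool:
    """Prefix-based allow/disallow check (hypothetical semantics)."""
    path = _path(url)
    # If any allow paths are given, the URL must fall under one of them.
    if allow and not any(path.startswith(p) for p in allow):
        return False
    # A matching disallow path always excludes the URL.
    return not any(path.startswith(p) for p in disallow)


def report_pages(crawled: list[str], exclude_from_report: list[str]) -> list[str]:
    """Drop scanned pages whose path falls under an excluded directory."""
    return [
        u for u in crawled
        if not any(_path(u).startswith(p) for p in exclude_from_report)
    ]
```

With plain prefix matching, `/blog` would also match `/blogroll`, so ending directory paths with a slash (for example `/blog/`) keeps the filters precise.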

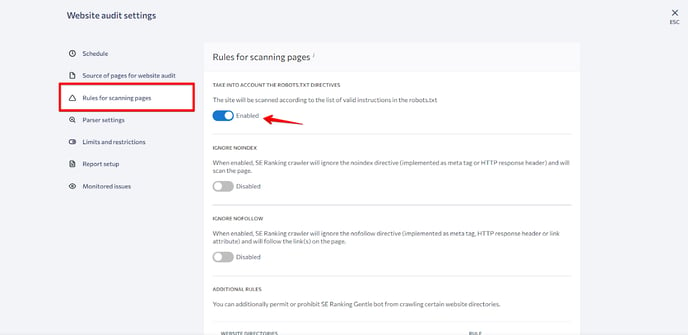

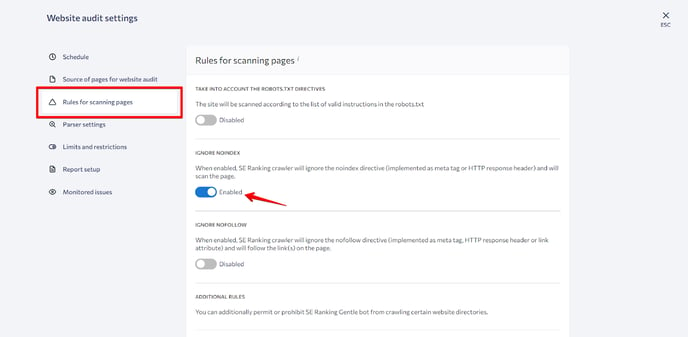

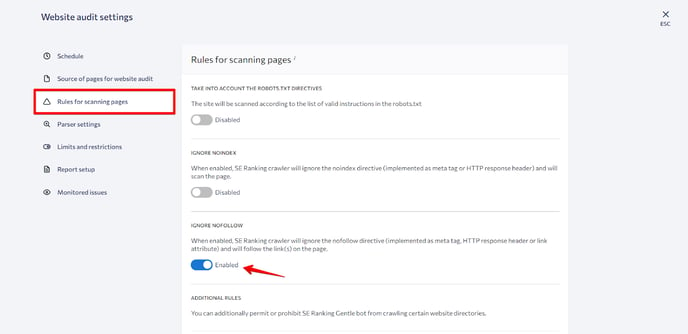

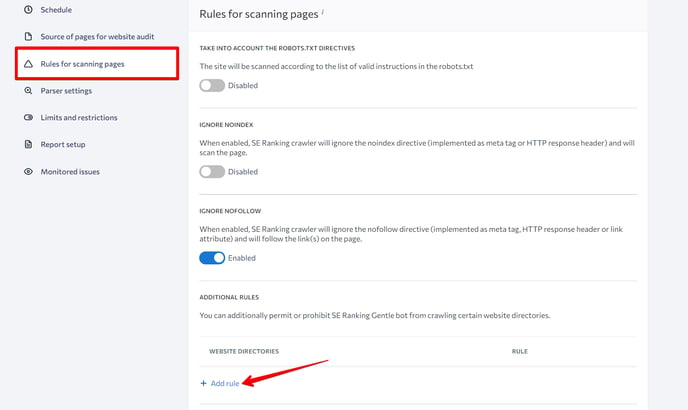

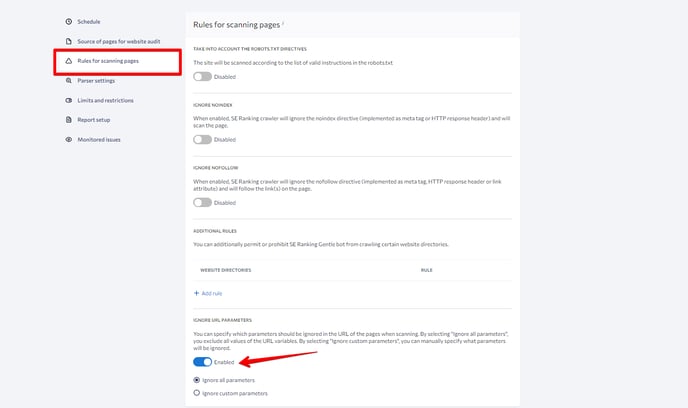

Rules for scanning pages

Under this settings block, you can set rules for crawling site pages.

For example:

1. Take robots.txt directives into account. When this option is enabled, the tool crawls the site according to the valid directives in the robots.txt file. When it is disabled, the tool ignores robots.txt directives.

2. Ignore Noindex. When this option is enabled, the tool crawls pages regardless of the Noindex directive (whether set as a meta tag or an HTTP response header).

3. Ignore Nofollow. When this option is enabled, the SE Ranking bot follows links on the page regardless of the Nofollow directive (whether set as a meta tag, an HTTP response header, or a link attribute).

4. Additional rules. Using this option, you can additionally allow or block the SE Ranking bot from crawling specific directories of the site. To do this, click on the Add rule button.

5. Ignore URL parameters. Here you can specify which URL parameters, such as UTM tags, the tool should ignore in page URLs during the audit. You can either ignore every parameter or manually select the ones to ignore.

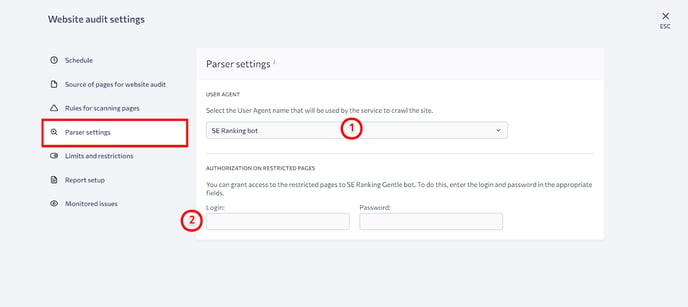

Parser settings

Here you can choose which bot (user agent) crawls your site and give the crawler access to pages that are closed off to search robots.

- Select a User Agent.

- Authorization on restricted pages.

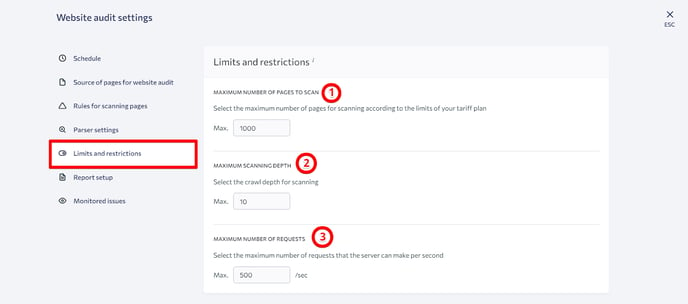

Limits and restrictions

This section allows you to configure crawling limits and restrictions. You can set separate limits and restrictions for each site under your account.

Here are the available settings:

- Maximum number of pages to scan. Choose a page limit within what your subscription plan allows.

- Maximum scanning depth. Select the crawl depth, that is, how many levels of links away from the starting page the tool will follow.

- Maximum number of requests. Depending on your server's capacity, you can increase the number of requests per second the tool sends to it to speed up report generation, or decrease it to reduce the server load. The default is 5 requests per second.
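The three limits interact: the page limit and depth limit decide which pages get scanned, and the request rate decides how long that takes. Here is a minimal breadth-first crawl sketch in Python that applies all three. It is an illustration under stated assumptions, not SE Ranking's crawler: the starting page is counted as depth 0, links are supplied by a caller-provided `get_links` function (hypothetical), and throttling simply spaces requests evenly.

```python
import time
from collections import deque
from typing import Callable, Iterable


def crawl(start: str,
          get_links: Callable[[str], Iterable[str]],
          max_pages: int,
          max_depth: int,
          requests_per_second: float = 5.0) -> dict[str, int]:
    """Breadth-first crawl returning {url: depth} for every page fetched.

    Assumption: the start page has depth 0, its direct links depth 1, etc.
    """
    interval = 1.0 / requests_per_second
    seen = {start}
    queue = deque([(start, 0)])
    fetched: dict[str, int] = {}
    last_request = float("-inf")
    while queue and len(fetched) < max_pages:
        url, depth = queue.popleft()
        # Throttle: keep at least `interval` seconds between requests.
        wait = last_request + interval - time.monotonic()
        if wait > 0:
            time.sleep(wait)
        last_request = time.monotonic()
        links = get_links(url)  # stands in for fetching and parsing the page
        fetched[url] = depth
        # Only queue new links while we are still above the depth limit.
        if depth < max_depth:
            for link in links:
                if link not in seen:
                    seen.add(link)
                    queue.append((link, depth + 1))
    return fetched
```

At the default of 5 requests per second, scanning 1,000 pages takes at least 1,000 / 5 = 200 seconds, which is why raising the rate speeds up reports and lowering it eases server load.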

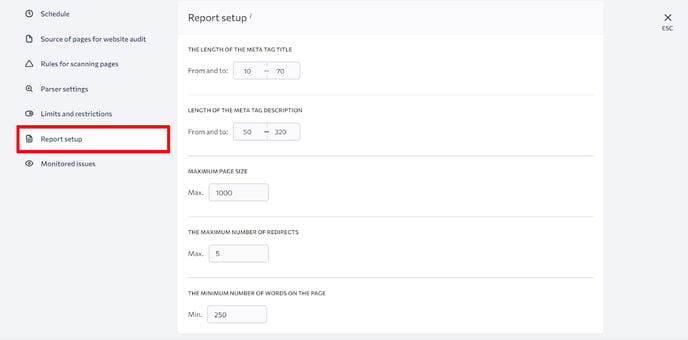

Report setup

When analyzing website parameters, SE Ranking follows current search engine recommendations. In the Report setup section, you can change the parameters the tool takes into account when crawling sites and compiling reports.

We have also introduced guest links, which let you share audit reports created on the SE Ranking platform. These shareable reports make it much easier to present findings to the specialists you work with. Guest links are available in both the stand-alone tool and project-based audits.